The Hidden Cost of Inconsistent Platform Governance

What we can learn from Amazon Marketplace sellers about trust erosion and how misconduct spreads on digital platforms.

Platform Papers is a blog about platform competition and Big Tech. Prominent academics discuss their latest research. The blog is linked to platformpapers.com, a repository that collects and organizes academic research on platform competition.

By Annabelle Gawer and Martín Harracá.

We’ve all heard the stories of the third-party seller on Amazon, the gig worker on Uber, or the content creator on TikTok who feels like they’re being treated unfairly by the platform they rely on. They follow all the rules, but their competitors get away with cheating, or the platform suddenly changes a policy that hurts their business. It can feel like a game where the house keeps moving the goalposts.

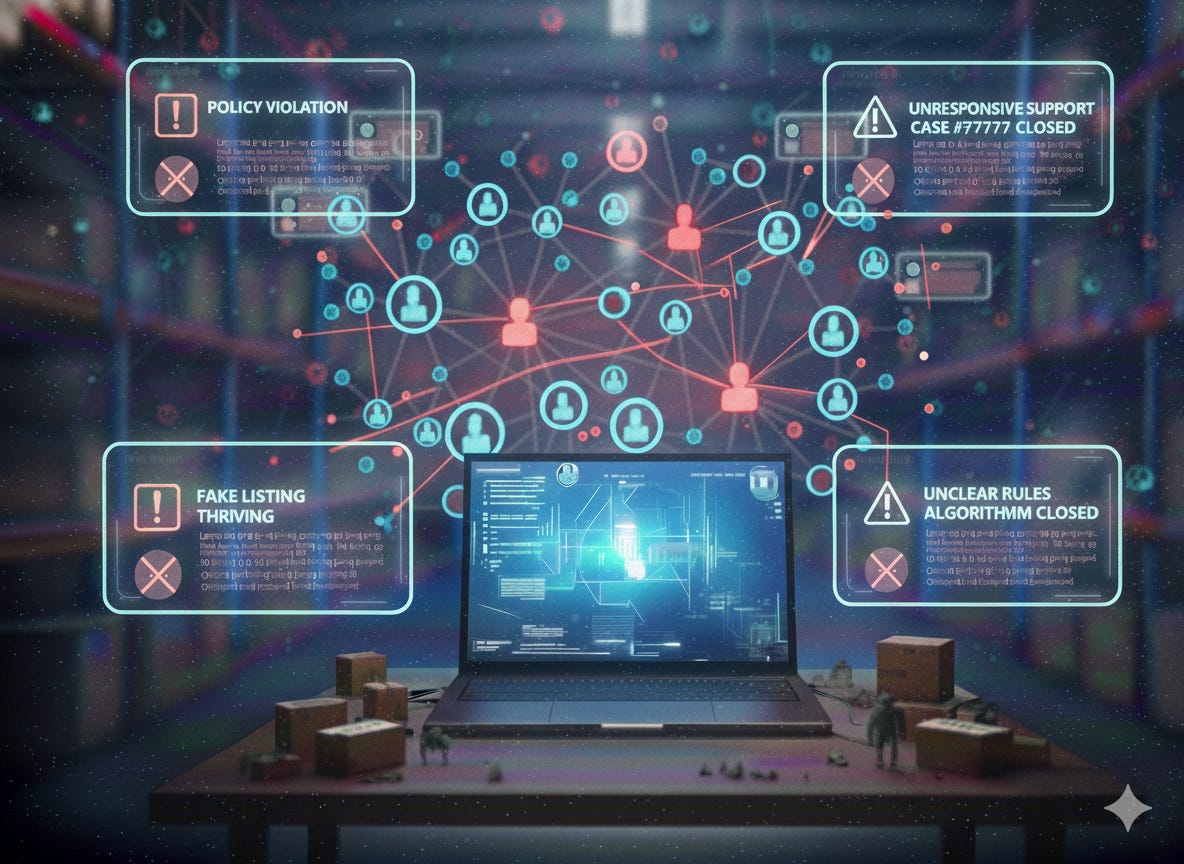

For a long time, academic research has focused on how platforms have enabled innovation and economic growth, while accumulating immense power. But what happens when that power is wielded inconsistently? Our study published in Research Policy explores this very issue. Based on an in-depth, qualitative analysis of Amazon’s Marketplace, we found that when platforms don’t enforce their own rules consistently, they’re not just creating a frustrating experience for sellers—they’re actively fostering an environment where misconduct becomes a survival strategy.

The disconnect in platform governance

Platforms often project a clear and consistent image of how they operate, including a declared, public-facing governance model. Amazon, for example, says it is committed to creating a “trustworthy environment” and that it invests heavily in preventing fraud and abuse. Its official rules, such as the Seller Code of Conduct, are designed to ensure fair play and a positive experience for everyone.

However, our research reveals that sellers experience a significant gap between these declared practices and the platform’s actual operations. We found sustained discrepancies between what Amazon says and what it does. For instance:

Lack of support: Despite Amazon’s claims of having thousands of employees dedicated to helping sellers, many sellers felt that actual support was limited, unresponsive, and often unhelpful. They reported difficulty getting even simple problems resolved and often hit a “black hole” when cases were passed between departments.

Unclear and changing rules: Sellers described a constant state of anxiety, trying to keep up with rules that are unclear and frequently change without warning. As one seller told us, “it constantly feels like you’re putting out fires”. This creates a sense of uncertainty, making it difficult for them to stay compliant.

Unreliable enforcement: The most significant issue, however, was a perception of inconsistent enforcement. Sellers complained that while they could be suspended for a minor infraction, blatantly fraudulent competitors seemed to go unpunished for significant violations such as selling fake or counterfeit goods, posting fake reviews, or even “hijacking” a competitor’s product listing.

From inconsistency to contagion

When sellers observe this gap between declared policy and lived experience, it triggers a chain reaction that we describe as a social contagion of misconduct.

Erosion of trust: Watching rule-breakers get away with cheating while they themselves struggled to keep up with the rules made sellers feel unprotected and vulnerable. They came to believe that the platform was indifferent to their plight, which eroded their trust and turned what Amazon calls a “partnership” into what one seller described as a “master-servant relationship”.

The “legitimation” of misconduct: In this environment, sellers began to justify their own rule-breaking. As one seller put it, “If you are playing a board game and everyone’s cheating, you are probably going to start cheating”. For many, engaging in “Grey Hat” (bending rules) or even “Black Hat” (flagrant violations) tactics was no longer seen as a moral failing, but a necessary defensive strategy against unfair competition.

This is how misconduct spreads—not just from “bad apples” but from a system that incentivizes it. When sellers lose faith in the platform as a reliable enforcer, they take matters into their own hands, and the entire ecosystem suffers.

About the research: In an inductive qualitative study, we analyzed interviews with 46 Amazon sellers, providers, and employees, complemented by observations at nine seller events, online forums, and social media, and extensive archival data from 680 documents from 2012 to 2024. Our findings reveal that Amazon sellers exhibited a wide range of behaviors, from full compliance to repeated misconduct. We found that sellers experienced a significant discrepancy between Amazon’s publicly declared governance and its actual, inconsistent enforcement. This inconsistency erodes their trust and, in turn, legitimizes misconduct as a necessary response to what they perceive as a fundamentally unfair marketplace.

The path forward: Clarity and accountability

Our findings raise important questions for policymakers and platform leaders. The solution isn’t just to punish bad actors; it’s to address the underlying governance failures that enable them. Platform ecosystems, while solving major coordination problems, also unavoidably give rise to certain endogenous governance failures. We argue that inconsistent platform governance is yet another type of endogenous failure.

Platforms should be held accountable for implementing and transparently enforcing their own rules, providing clear, accessible policies and offering effective support channels staffed by people who can actually resolve issues. For regulators, this could mean mandating a legal framework that requires platforms to act as reliable, fair arbiters for their ecosystems, similar to how credit card companies handle disputes.

When platforms fail to govern their ecosystems with integrity, they risk a collapse of trust that can ultimately undermine their own foundations. A healthy platform economy requires more than just a clear set of rules; it demands platforms that enforce them consistently and fairly, with a keen understanding of the people whose livelihoods depend on them.

This post is based on research published in Research Policy and is included in the Platform Papers references dashboard:

Gawer, A., & Harracá, M. (2025). Inconsistent platform governance and social contagion of misconduct in digital ecosystems: A complementors perspective. Research Policy, 54(8), 105300.

Call-for-Papers: EU-DPRN 2026

The annual European Digital Platform Research Network (EU-DPRN) Summit brings together scholars across strategy, entrepreneurship, marketing, IS, and economics to advance research on digital platforms and ecosystems.

The 2026 summit will be held June 11–12 at Aalto University in Finland.

We invite full-paper submissions on all aspects of the platform economy, especially work examining the role of digital technologies—data, generative AI, and emerging information and innovation platforms—in shaping platform strategy.

Faculty should submit full working papers (~40 pages). PhD students may submit either full papers or shorter works (~6–10 pages) for the doctoral workshop.

The submission deadline is January 31, 2026, via the conference’s Google Form.

Platform Updates

Paramount disrupts Netflix’s studio pivot: Paramount’s surprise $108bn counter-offer for Warner Bros. Discovery challenges Netflix’s $83bn agreed takeover price. The outcome now rests on a volatile mix of shareholder interests and a shifting political climate regarding antitrust against Big Tech.

Google challenges Nvidia’s dominance with Gemini 3: Gemini 3, trained entirely on Google’s custom Tensor chips, is winning praise as a potential validation of vertical integration. This could signal a possible alternative to Nvidia, nudging hyper-scalers toward mixed-chip stacks that reduce vendor lock-in.

Zuckerberg pivots from metaverse to AI wearables: Meta plans to slash its Reality Labs budget by 30%, signaling a strategic retreat from immersive VR and a double-down on AI-integrated hardware. By shifting resources to products like Ray-Ban smart glasses, Meta aims to build a viable AI delivery platform.

OpenAI hits code red to defend its product moat: Pausing expansion into ads and retail, the company is pivoting resources to fix ChatGPT’s quality. Facing surging rivalry from Google and Anthropic, OpenAI aims to stem user churn and prevent switching before competitors’ infrastructure advantages erode its lead.

Apple rewires its C-suite for the AI era: The high-profile exits of leadership across AI, legal, policy, and operations at Apple signal a generational reset to break years of stagnation. By recruiting outsiders from Meta and Microsoft, Apple appears to be abandoning its insular culture to prioritize AI velocity and legal defense.

AI slop tests Reddit’s volunteer defense: Moderators across major subreddits report being overwhelmed by synthetic stories and rage-bait that evade detection. This influx creates a strategic crisis: as cheap AI generation outpaces human moderation capacity, Reddit risks losing the trust layer that defines its platform.

EA to build hybrid sports platform: The video game publisher’s $55bn buyout may free EA from quarterly pressures, allowing a high-stakes pivot beyond gaming. Unshackled, the company can merge franchises like FC with live rights, betting, and GenAI, blurring the lines between playing, watching, and wagering.

Platform Papers is published, curated and maintained by Joost Rietveld.